Troubleshooting for Slow or Large Models

Last updated on 2025-11-07

Overview

The performance of a model in xP&A, i.e. how quickly it loads and calculates, depends on several factors:

- Model size (the cell count depends mainly on the number of time steps, dimension items, variables, and scenarios)

- Complexity of the formulas (e.g. a sumif formula is slower than a simple addition)

- Complexity of the visuals (charts and tables)
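As a rough illustration of how the size factors above interact, the following Python sketch estimates a cell count. It assumes the count is simply the product of the four factors, which is an upper-bound simplification and not xP&A's documented calculation; the function name and the example figures are hypothetical.

```python
def estimate_cells(time_steps: int, dimension_items: int,
                   variables: int, scenarios: int) -> int:
    """Rough upper bound on cell count.

    Assumption (not from the xP&A documentation): every variable carries
    the dimension in every scenario, so the factors simply multiply.
    """
    return time_steps * dimension_items * variables * scenarios


# Hypothetical model: 5 years, 50 dimension items, 200 variables, 3 scenarios.
monthly = estimate_cells(time_steps=60, dimension_items=50, variables=200, scenarios=3)
quarterly = estimate_cells(time_steps=20, dimension_items=50, variables=200, scenarios=3)

print(monthly)    # 1800000
print(quarterly)  # 600000
```

Under this assumption, reducing any single factor shrinks the estimate proportionally: moving from monthly to quarterly time steps cuts it to a third.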

This article suggests some ways to address slow model performance.


Using the Model Inspector

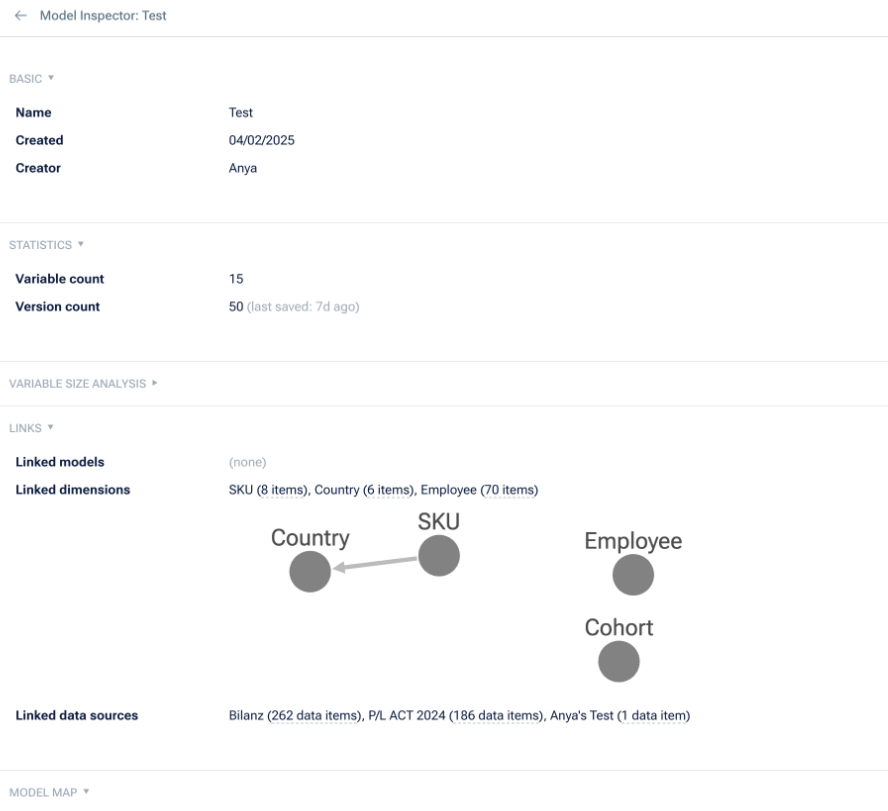

To investigate a model's size, and to see which linked models and which variables within them contribute most to it, use the Model Inspector.

To open the Model Inspector, press i on your keyboard while you are in a model with no variables selected. The following dialog appears:

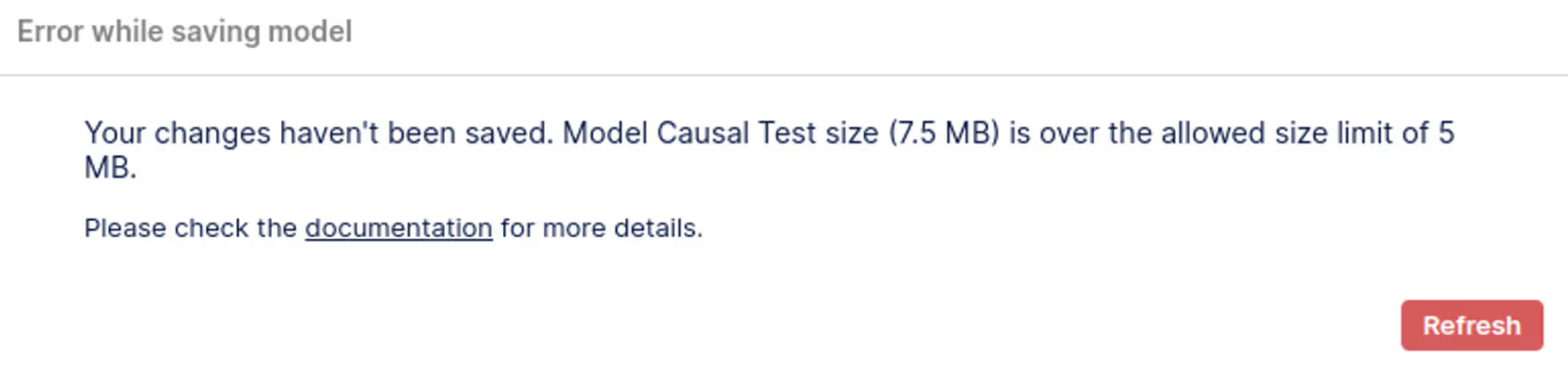

Storage Limit

If you see the error message below, the model has exceeded its storage limit. Open the Model Inspector as described above and pay particular attention to the Variable Size Analysis section.

This section lists the largest variables in the model, so you know which variables to target when reducing its size.

Model size warning


Other Possible Solutions

If your models become slow, consider the following options:

- Turn on Manual recompute. This means you can make multiple edits to the model, recompute to evaluate them all at once, and save to persist your changes.

To do so, open the model settings and activate Recompute and save manually under Calculation.

- Hide the visuals pane (top-right of the spreadsheet), and/or consider deleting unused visuals or moving them to a separate model.

- In the time settings, consider reducing the model granularity and/or the length of your model.

- If you are using dimensions (including cohorts):

  - Consider if you need the dimension on every variable in your model(s), or just some variables. You can aggregate away the dimension for the variables you don’t require the breakdown on.

  - Consider if you can reduce the number of dimension items in the dimension (e.g. grouping all smaller dimension items into "other" instead of having them each as separate items).

  - If you have variables that have a specific value/formula for each dimension item (or multiple overrides within the variable), this will make the model larger as xP&A needs more storage space. Consider if you need this, or could use one aggregate formula instead, or use a dimension item in an if-statement (allowing you to dynamically define a variable's dimensional values/formulas without requiring a separate formula for each dimension item).

- With if-statements, consider whether you really need a nested if-statement (i.e. if X then Y else if A then B else [...]), or whether you can use multiple separate if-statements in the same formula (i.e. if X then Y else 0 + if A then B else 0 + [...]). The latter is simpler and quicker to compute because the if-statements are evaluated separately. Note that the two forms are guaranteed to give the same result only if at most one of the conditions can be true at a time.

- If you have one big model, consider splitting it up into smaller sub-models. You can move variables to make these changes easily.

- Consider removing scenarios that are no longer in use.

- If your model is connected to a data source, and the data source is large (for example because of its granularity or dimensionality), consider aggregating the data in the data source instead of in xP&A.
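The difference between a nested if-statement and several separate if-statements, mentioned in the list above, can be sketched in Python. This is not xP&A formula syntax; the function names are hypothetical and only illustrate the logic. The parentheses around each conditional are required in Python, and whether xP&A's formula language needs them is not confirmed here.

```python
def nested(x: bool, y: float, a: bool, b: float) -> float:
    # Mirrors: if X then Y else if A then B else 0
    if x:
        return y
    elif a:
        return b
    return 0.0


def additive(x: bool, y: float, a: bool, b: float) -> float:
    # Mirrors: (if X then Y else 0) + (if A then B else 0)
    return (y if x else 0.0) + (b if a else 0.0)


# At most one condition true: both forms agree.
print(nested(True, 10.0, False, 5.0), additive(True, 10.0, False, 5.0))  # 10.0 10.0
print(nested(False, 10.0, True, 5.0), additive(False, 10.0, True, 5.0))  # 5.0 5.0

# Both conditions true: the forms differ, so the rewrite is not safe here.
print(nested(True, 10.0, True, 5.0), additive(True, 10.0, True, 5.0))    # 10.0 15.0
```

The last line shows why the additive form should only replace a nested one when the conditions cannot overlap.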
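Grouping small dimension items into "Other", or aggregating data before it reaches xP&A, could be done in a preparation script upstream of the data source. The following is a minimal Python sketch under that assumption; the function name, the `keep` parameter, and the sample figures are hypothetical and not part of xP&A.

```python
def group_small_items(values: dict[str, float], keep: int) -> dict[str, float]:
    """Keep the `keep` largest items and sum the rest into a single 'Other' item."""
    ranked = sorted(values.items(), key=lambda kv: kv[1], reverse=True)
    grouped = dict(ranked[:keep])
    rest = ranked[keep:]
    if rest:
        grouped["Other"] = sum(value for _, value in rest)
    return grouped


# Hypothetical revenue by country: six dimension items before grouping.
revenue_by_country = {
    "DE": 500.0, "FR": 300.0, "UK": 250.0,
    "AT": 20.0, "CH": 15.0, "LU": 5.0,
}

print(group_small_items(revenue_by_country, keep=3))
# {'DE': 500.0, 'FR': 300.0, 'UK': 250.0, 'Other': 40.0}
```

Here six dimension items shrink to four while the total stays the same, which reduces the number of cells for every variable that carries the dimension.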